Radiant Process Analysis

A Concept in Action for Understanding DevOps and the Humans behind it

The Idea

Imagine a device that could parse historical behavior and highlight what matters most. In Isaac Asimov’s Foundation book series, that device is the Prime Radiant, used to model humanity via psychohistory, and predict the fate of civilization. I don’t have ambitions that grand, I’m mostly concerned with the fate of a repository or two. But by watching what actually happens in development workflows over time, it’s possible to spot friction, anticipate regressions, and surface opportunities for improvement before they escalate in a way that raw metrics can’t.

Observing repos and pipelines in this way surfaces actionable insights. It’s not a replacement for metrics or dashboards, but another lens on workflow behavior, capturing subtleties that aggregate numbers often obscure. Failures, bottlenecks, or slowdowns can occur hourly, daily, or asymmetrically, and high level summaries often miss these signals. The underlying hypothesis is simple: workflow behavior, observed directly, contains enough structure to reason about improvement without collapsing everything into a single number.

Traditional Frameworks

DORA is good. SPACE is good. They’ve both continue to contribute meaningfully to how teams think about engineering effectiveness. And they also come with costs and assumptions baked-in.

Both frameworks require upfront investment: surveys, calibration, shared definitions, and agreement on what terms like “deployment,” “lead time,” or “productivity” even mean in a given organization. That work is feasible in mature environments where processes are stable and roles are well-defined.

In startups or rapidly changing teams, those assumptions tend to fracture if they can even come into focus to begin with. Teams ship in irregular bursts. On-call rotations are ad hoc. The same person might write code, review PRs, and deploy in the same afternoon. Seasonality exists, but it’s rarely clean or symmetric. Under those conditions, metric driven approaches often lag reality or require constant redefinition to stay relevant. And even then, finding the time to strategically analyze the data and propose changes often falls low on the broader priority list.

There’s also an unavoidable abstraction cost. Frameworks like DORA, SPACE, or STAR reduce human behavior into metrics, which is useful, but it strips away context that often matters most: stress around incidents, confidence when shipping, the quiet friction of reviews that stall, or the cognitive tax of repeatedly failing CI. These human signals are embedded in technical systems, but they rarely map cleanly to a score or percentile. Teams can look healthy on paper while accumulating frustration or latent risk underneath.

Radiant Process Analysis doesn’t compete with these frameworks. It sidesteps some of their fragility by observing what actually happened, preserving narrative and pattern alongside measurement rather than requiring agreement upfront on what should be measured. It’s another way to look at the same system, particularly useful when formal measurement is expensive or prematurely rigid. With this flexibility comes other bonuses too - Goodhart’s Law becomes a non-issue and teams can focus more on the spirit of continuous improvement instead of mandating minute processes, for example.

An Exemplar

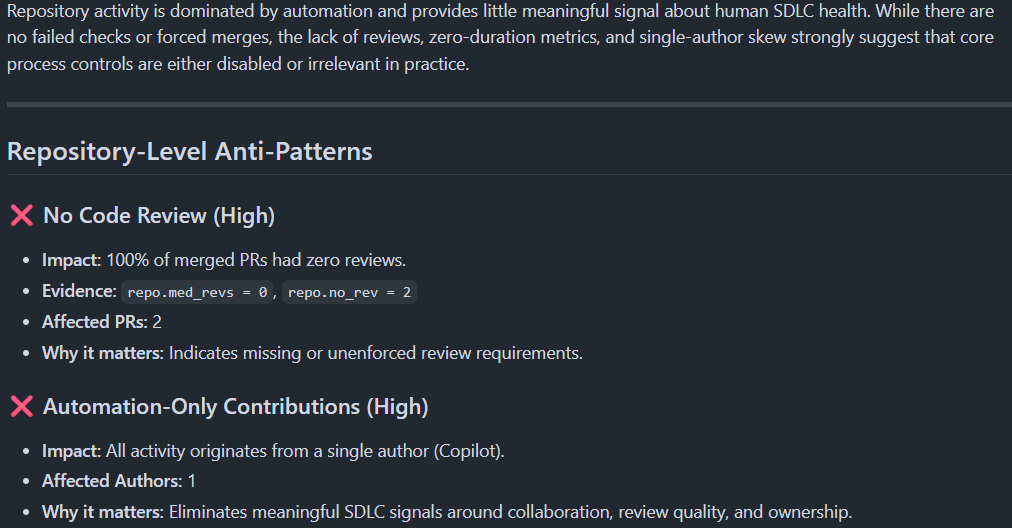

To explore this idea, I built Radiant Process Analysis. It mines PRs, CI results, merges, reverts, and workflow runs, then condenses the data into high signal patterns. Over time, what counts as “signal” evolves and expands as both inferencing and data signals grow in quality and quantity. It “radiates” out recommendations for the future, in the context of themes and recommendations, to avoid incidents and process based regressions. This implementation is just one exemplar, designed to show how a workflow can be observed, not to prescribe a permanent set of checks.

I see this as part of what defines a strong DevOps engineer. One of the oft-unspoken duties is noticing subtle friction points, inferring where processes falter, and nudging the workflow toward smoother operation. Radiant Process represents a practical step toward automating that kind of observation. It doesn’t replace judgment. It reduces the cognitive load required to exercise it well.

Patterns That Matter

Some examples illustrate the kind of signals that emerge:

PRs without meaningful descriptions tend to take longer to review.

Repeated first run CI failures on certain branches hint at gaps in local testing or overlooked dependencies.

PRs that are force-pushed after review often indicate friction between authors and reviewers.

None of these are universal truths. They’re contextual patterns. But seeing them consistently, over time, provides evidence about where attention might be well spent, or where behavior may be changing due to shifts in priorities.

Technical Approach

Octokit makes the GitHub API calls straightforward, allowing collection of PRs, commits, CI results, merges, reverts, and workflow runs without additional infrastructure. Copilot handles the AI summarization.

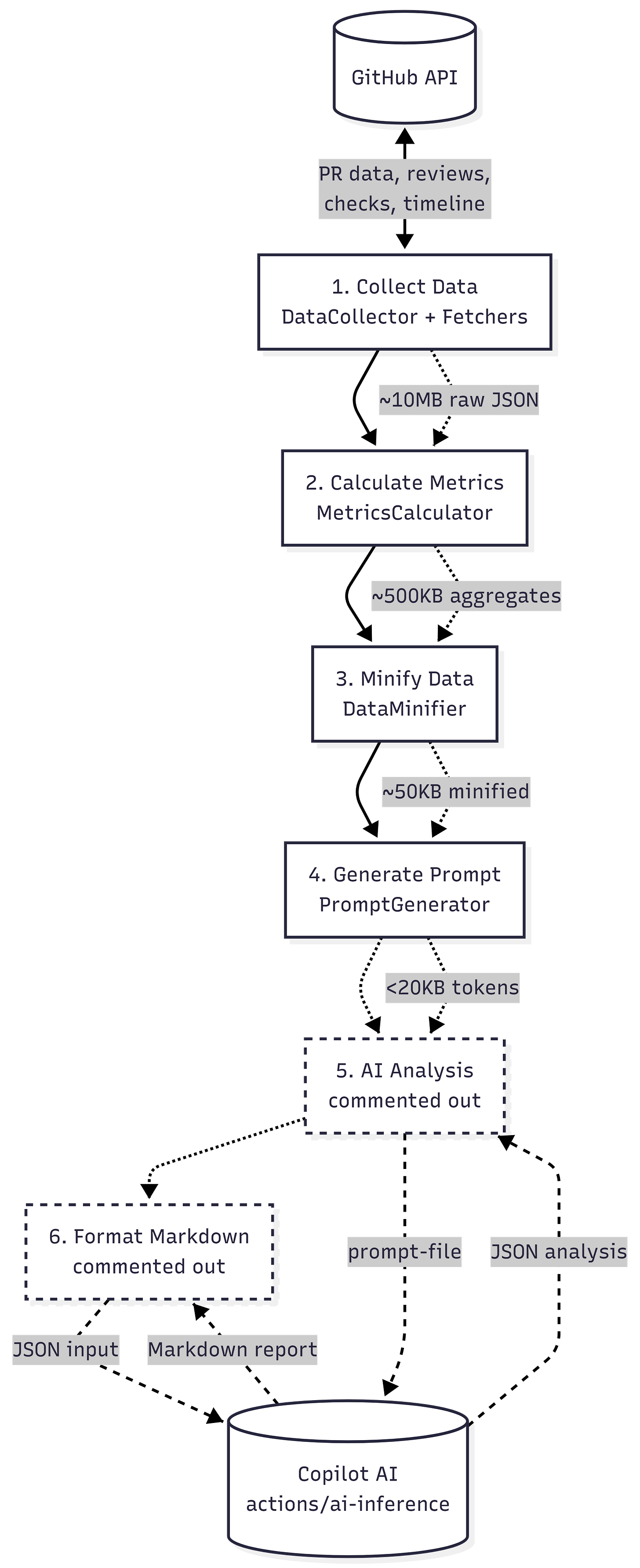

I initially experimented with MCP, but strict input limits and ballooned context made it a poor fit for this kind of longitudinal analysis, even with I strictly limited its available tools. GitHub Actions paired with actions/ai-inference worked better, and the built-in model routing behind Copilot makes for a functional, drop-in deployment model. The workflow uses a two-phase process: first mining and pruning raw data, then passing a highly condensed representation to the model.

In the future, as token limits for the ai-inference action ease, more of this work can shift to large language models and MCP tools, reducing reliance on pre-selected data and hard constraints. That said, deciding what belongs in traditional REST or GraphQL calls versus MCP-driven inference will remain both an art and a science as advances continue in new design patterns.

The output is plain language summaries and recommendations that are inspectable, auditable, and diffable. A logic flow diagram makes the system explicit. Nothing is hidden. The analysis is intentionally time bounded and repo-relative, so it evolves alongside the work itself. However, these reports can easily be exported and checked into a central reporting repository for a more estate-based approach, due to the modularity of its GitHub Action.

This approach is still a work-in-progress after some rate limiting setbacks I faced. By the time I had solved my context window issues, GitHub had brandished me as adversarial, and I am still waiting to be released from 429 code prison. When I am freed, this post will be updated with links to the GitHub repository and a demo.

Why This Kind of Observation Pays Off (I hope)

DevOps wins are categorically force multiplied:

Saving a few minutes per pull request compounds across a team. Fewer unnecessary CI runs reduce spend and tighten feedback loops. Clearer workflows lead to more predictable releases and higher confidence in what ships. During incidents, reliable deployments and rollbacks directly reduce recovery time and operator stress.

These improvements rarely show up in a single headline metric. They emerge from sustained reductions in friction across day-to-day work. Observational approaches like this are useful because they surface those patterns early, before inefficiencies harden into default behavior. It can be an early warning sign to changes in team dynamics, shifts in pressure, compounding stress levels, or just flaky tests. Because issues can stem from any number of underlying problems, the way we look at symptoms has to be fluid and flexible.

The broader goal is practical humanism through technology: using systems to increase understanding of how work actually happens, not to police it. By examining concrete signals in repository activity, it becomes possible to spot sources of friction and address them while they are still cheap and easiest to fix.

This is not about predicting teams or optimizing people. It is about understanding the current state of a repository well enough to keep it healthy, so engineers can spend more time on judgment, creativity, and shipping reliable systems.